In the Zabbix monitoring system, a warning alert occurred on the nvidia-container-toolkit pod of a GPU node.

The pod status was CrashLoopBackOff.

At first glance, it appeared to be a simple pod configuration issue.

However, log analysis revealed that the root cause lay in the containerd socket, which the Kubernetes container runtime depends on: the socket path had turned into a directory instead of a socket file.

This post describes the troubleshooting process for this issue and explains in detail the role containerd plays in Kubernetes and GPU environments, as well as the impact when failures occur.

1. Background Knowledge: The Role and Importance of Containerd

Before troubleshooting, it is necessary to understand the role containerd plays in a Kubernetes cluster, especially on GPU nodes.

1.1 Role in Kubernetes Architecture (CRI)

The Kubernetes agent, kubelet, does not control containers directly.

Instead, it issues commands to the runtime through a standard interface called CRI (Container Runtime Interface).

- Containerd: A lightweight, standardized container runtime separated from Docker. It is responsible for image pulling, network namespace creation, and container lifecycle management.

- Communication method:

Kubelet communicates with containerd via gRPC over the socket unix:///run/containerd/containerd.sock.

If this socket is unavailable, kubelet can no longer create, stop, or inspect containers, and the node is effectively in a brain-dead state.

1.2 Relationship with GPU Workloads (NVIDIA Runtime)

On GPU nodes, containerd is not just an execution engine.

It acts as the core gateway for GPU resource allocation.

- NVIDIA Container Toolkit:

When the toolkit pod starts, it modifies the containerd configuration to register the NVIDIA runtime and its hooks.

- Runtime Hook:

When a container starts (prestart), the NVIDIA runtime invoked by containerd calls the hook to mount GPU driver libraries (libnvidia-ml.so) and device files (/dev/nvidia0) into the container.

If containerd is not functioning properly:

- Kubelet cannot create pods.

- GPU drivers are not injected into containers, causing nvidia-smi to fail.

- CNI plugins are not configured, potentially paralyzing networking on the entire node.
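One quick way to see whether the NVIDIA runtime is registered on a node is to look for an nvidia runtime entry in the containerd configuration. The sketch below assumes the common layout in which runtimes are declared as `...containerd.runtimes.<name>` tables in /etc/containerd/config.toml. The helper name `has_nvidia_runtime` is made up for illustration, and table names may differ between containerd versions.

```shell
# Hypothetical helper (not from the original post): succeeds if the given
# containerd config file contains a TOML table header for a runtime
# named "nvidia".
# Assumption: runtimes are declared as [...containerd.runtimes.<name>].
has_nvidia_runtime() {
    config_file="${1:-/etc/containerd/config.toml}"
    # Return 2 when the file cannot be read, so "unreadable" is
    # distinguishable from "runtime not registered" (status 1).
    [ -r "$config_file" ] || return 2
    grep -Eq '^[[:space:]]*\[.*runtimes\.nvidia\]' "$config_file"
}
```

It could be called as `has_nvidia_runtime && echo "nvidia runtime registered"`. A missing entry only hints at a problem; it does not prove the toolkit failed.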

2. Failure Symptoms

💡 Troubleshooting Guide

This post describes a containerd-related incident and its resolution.

Do not blindly copy and paste commands.

Always check your own system logs first and adapt the steps to your operating environment.

Step 1: Check Logs (Diagnosis)

# journalctl -xeu containerd --no-pager

Step 2: Action Guidelines Based on Log Results

CASE A: "is a directory" error

- Diagnosis: The socket file has been corrupted into a directory (this case).

- Resolution: Perform only the socket directory removal procedure.

- Warning: Do not modify a healthy config.toml.

CASE B: "timeout" errors only

- Diagnosis: The daemon is unresponsive (hang).

- Resolution: Do not touch configuration or files. Start with restarting containerd.

CASE C: "parse error" or "invalid character" errors

- Diagnosis: The config.toml syntax is broken.

- Resolution: Back up config.toml, then reinitialize or manually fix it.

Key point:

The resolution differs completely depending on what the logs indicate.
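The three cases above can be sketched as a small log classifier. This is only an illustration: the function name `classify_containerd_log` is hypothetical, and the match strings are the ones quoted in this guide, so real log wording (for example "timed out") may need additional patterns.

```shell
# Hypothetical helper (not from the original post): reads containerd log
# text on stdin and prints which case from the guide it matches:
# A, B, C, or "unknown".
# Checks run in the order A, C, B so that a timeout only counts as CASE B
# when neither of the other signatures is present ("timeout errors only").
classify_containerd_log() {
    log_text=$(cat)
    if printf '%s\n' "$log_text" | grep -q 'is a directory'; then
        echo "A"
    elif printf '%s\n' "$log_text" | grep -Eq 'parse error|invalid character'; then
        echo "C"
    elif printf '%s\n' "$log_text" | grep -qi 'timeout'; then
        echo "B"
    else
        echo "unknown"
    fi
}
```

A possible invocation is `journalctl -u containerd --no-pager | classify_containerd_log`. The result is a starting point for reading the logs, not a substitute for it.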

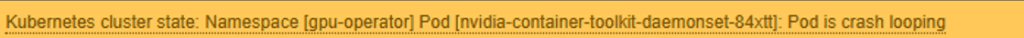

2.1 Zabbix Alert and Pod Status

- Trigger: K8s Pod Crash Looping

- Target: gpu-operator/nvidia-container-toolkit-daemonset

NAME READY STATUS RESTARTS AGE

nvidia-container-toolkit-daemonset-84xtt 0/1 CrashLoopBackOff 681 2d
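A restart count this high is easy to miss on a busy node. As a rough sketch, the filter below reads `kubectl get pods` table output and prints pods whose RESTARTS value is at or above a threshold. The function name `high_restart_pods` and the default threshold of 100 are assumptions made for illustration, and the default column order (NAME READY STATUS RESTARTS AGE) is assumed.

```shell
# Hypothetical helper (not from the original post): reads `kubectl get pods`
# output on stdin and prints the names of pods whose RESTARTS count is at
# or above the given threshold (default 100, an arbitrary choice).
# Assumes the default column order: NAME READY STATUS RESTARTS AGE.
high_restart_pods() {
    awk -v limit="${1:-100}" '
        # Skip the header row and any short or blank lines.
        $1 != "NAME" && NF >= 4 && ($4 + 0) >= (limit + 0) { print $1 }
    '
}
```

For example: `kubectl get pods -n gpu-operator | high_restart_pods 100`.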

2.2 Pod Log Analysis

level=warning msg="Error signaling containerd ... dial unix /run/containerd/containerd.sock: connect: connection refused"

A connection refused error normally means nothing is listening on the socket, which typically occurs when the process is down.

However, systemctl status containerd showed the process was active.

3. Deep Analysis and Root Cause Identification

3.1 Node System Log Inspection

# journalctl -xeu containerd

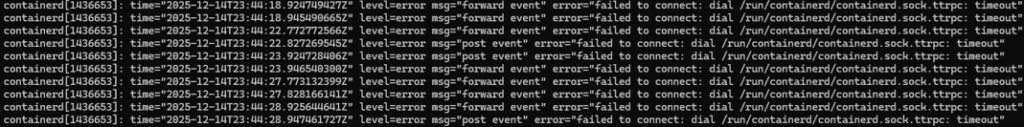

3.2 Root Cause: TTRPC Timeout and SIGHUP Storm

- Symptom:

The containerd process was running, but its internal communication protocol (ttrpc) timed out and became unresponsive.

- Cause:

The nvidia-container-toolkit pod restarted more than 680 times.

Each restart sends a SIGHUP signal to containerd to reload configuration.

- Conclusion:

Excessive SIGHUP signals caused by repeated crashes likely pushed containerd into a deadlocked state.

3.3 First Attempt: Restarting Containerd

# systemctl restart containerd

The service failed with exit code 1.

4. Troubleshooting Step 1: Restoring Containerd Configuration (config.toml)

Repeated crashes of nvidia-container-toolkit may have caused duplicated or incomplete runtime configuration to be applied to /etc/containerd/config.toml, preventing containerd from starting.

4.1 Resetting the Configuration

# systemctl stop containerd

// Mandatory backup. Restore on failure.

# mv /etc/containerd/config.toml /etc/containerd/config.toml.bak

# containerd config default > /etc/containerd/config.toml

// SystemdCgroup must be set to true (the key is case-sensitive: capital S)

# sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml
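Because sed matches case-sensitively and exits successfully even when nothing was replaced, it is worth confirming that the setting actually changed. In containerd's default configuration the key is spelled `SystemdCgroup`. The sketch below wraps the substitution with a verification step; the function name `enable_systemd_cgroup` is hypothetical, and it goes through a temporary file rather than using `sed -i`.

```shell
# Hypothetical helper (not from the original post): rewrites the cgroup
# setting in a containerd config file and verifies the result.
enable_systemd_cgroup() {
    config_file="$1"
    tmp_file=$(mktemp) || return 1
    # Write to a temp file first, then copy the content back, so the
    # original file keeps its owner and permissions.
    if sed 's/SystemdCgroup = false/SystemdCgroup = true/' "$config_file" > "$tmp_file"; then
        cat "$tmp_file" > "$config_file"
    fi
    rm -f "$tmp_file"
    # sed returns 0 even when no line matched, so check the file itself.
    if grep -Eq '^[[:space:]]*SystemdCgroup = true' "$config_file"; then
        echo "SystemdCgroup is enabled in $config_file"
    else
        echo "SystemdCgroup = true not found in $config_file" >&2
        return 1
    fi
}
```

It could be called as `enable_systemd_cgroup /etc/containerd/config.toml` after the default configuration has been generated.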

4.2 Service Start (Failed)

# systemctl start containerd

Job for containerd.service failed because the control process exited with error code.

See "systemctl status containerd.service" and "journalctl -xeu containerd.service"

5. Troubleshooting Step 2: Socket File Corruption

5.1 Restart Failure Logs

# journalctl -xeu containerd

...

level=info msg=serving... address=/run/containerd/containerd.sock.ttrpc

containerd: failed to get listener for main endpoint: is a directory

systemd[1]: containerd.service: Main process exited, code=exited, status=1/FAILURE

5.2 Root Cause: "is a directory"

- Normal state: /run/containerd/containerd.sock must be a socket file.

- Failure state: The path was a directory.

Failure sequence:

- containerd stopped or hung and the socket file disappeared.

- A pod attempted to mount the path via hostPath.

- Kubernetes created a directory because the file did not exist.

- containerd later failed to start because a directory occupied the socket path.
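The end state of the sequence above can be detected with standard shell file tests. The following is a minimal sketch; the function name `socket_state` is made up for illustration.

```shell
# Hypothetical helper (not from the original post): prints what currently
# occupies a socket path: socket, directory, other, or missing.
socket_state() {
    if [ -S "$1" ]; then
        echo "socket"       # healthy: a Unix domain socket
    elif [ -d "$1" ]; then
        echo "directory"    # the failure state described above
    elif [ -e "$1" ]; then
        echo "other"        # some other file type occupies the path
    else
        echo "missing"      # nothing there (containerd may be stopped)
    fi
}
```

For example, `socket_state /run/containerd/containerd.sock` printing `directory` would correspond to the failure state described here.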

6. Final Resolution

6.1 Removing the Invalid Directory and Recovering Containerd

Run on the affected node only.

// 1. Verify file type

# ls -ld /run/containerd/containerd.sock

drwxr-xr-x 2 root root ... /run/containerd/containerd.sock

// 2. Stop containerd and remove directory

# systemctl stop containerd

# rm -rf /run/containerd/containerd.sock

// 3. Start services

# systemctl start containerd

# systemctl restart kubelet

// 4. Verify socket creation

# ls -ld /run/containerd/containerd.sock

srw-rw---- 1 root root ... /run/containerd/containerd.sock
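If this procedure is scripted, a guard against deleting a healthy socket may be useful. The sketch below is a more conservative variant of step 2, not the exact procedure used in this incident: it removes the path only when it is a directory, and it uses rmdir instead of rm -rf so that a non-empty directory is reported rather than deleted. The function name `remove_socket_dir` is hypothetical.

```shell
# Hypothetical helper (not from the original post): removes the socket
# path only when it is a directory. A real socket, a regular file, or a
# missing path is left untouched.
remove_socket_dir() {
    sock_path="$1"
    [ -n "$sock_path" ] || return 2
    if [ -S "$sock_path" ]; then
        echo "refusing: $sock_path is a socket" >&2
        return 1
    fi
    if [ ! -d "$sock_path" ]; then
        echo "nothing to do: $sock_path is not a directory" >&2
        return 1
    fi
    # rmdir only succeeds on an empty directory, so unexpected contents
    # are surfaced instead of being silently deleted.
    rmdir "$sock_path"
}
```

As in the steps above, containerd should be stopped first, for example `systemctl stop containerd && remove_socket_dir /run/containerd/containerd.sock`.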

6.2 Pod Recovery

# kubectl delete pod nvidia-container-toolkit-daemonset-84xtt -n gpu-operator

7. Impact Analysis

- Node control loss: kubelet could not communicate with the runtime.

- GPU functionality loss: NVIDIA runtime hooks failed, and nvidia-smi failed.

- Zombie processes and network isolation due to orphaned shims and broken CNI state.

8. Handling Left-over Processes

If logs show Found left-over process (containerd-shim):

// Forcefully terminate containerd-related processes

# pkill -9 -f containerd

If pkill is unavailable:

# ps -ef | grep containerd | grep -v grep | awk '{print $2}' | xargs -r kill -9
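Both commands above match every process with "containerd" in its command line, including the daemon itself. Before killing anything, it may help to list only the shim processes for review. The filter below is a sketch under the assumption that shim binaries are named with a `containerd-shim` prefix, as in the log message above; the function name `shim_pids` is hypothetical.

```shell
# Hypothetical helper (not from the original post): reads `ps -eo pid,args`
# output on stdin and prints only the PIDs whose command (second column)
# is a binary whose name starts with "containerd-shim".
shim_pids() {
    awk '$2 ~ /(^|\/)containerd-shim/ { print $1 }'
}
```

For example, `ps -eo pid,args | shim_pids` prints the candidate PIDs, which can then be checked by hand before being passed to kill.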

9. Conclusion

When CrashLoopBackOff occurs, do not stop at pod logs.

Always inspect system-level logs.

In environments using hostPath, socket paths can be corrupted into directories during runtime restarts, and recognizing this behavior is critical for fast recovery.

🛠 Last modified: 2025.12.17

ⓒ 2025 엉뚱한 녀석의 블로그 [quirky guy's Blog]. All rights reserved. Unauthorized copying or redistribution of the text and images is prohibited. When sharing, please include the original source link.

💡 Need Professional Support?

If you need deployment, optimization, or troubleshooting support for Zabbix, Kubernetes,

or any other open-source infrastructure in your production environment, or if you are interested in

sponsorships, ads, or technical collaboration, feel free to contact me anytime.

📧 Email: jikimy75@gmail.com

💼 Services: Deployment Support | Performance Tuning | Incident Analysis Consulting

📖 PDF eBook (Gumroad):

Zabbix Enterprise Optimization Handbook

A single, production-ready PDF that compiles my in-depth Zabbix and Kubernetes monitoring guides.
