Operating a Kubernetes cluster in a completely disconnected (air-gapped) environment requires more than just installation.

All operational components (OS packages, container images, backup and recovery systems, and monitoring) must be served and operated entirely within the internal network.

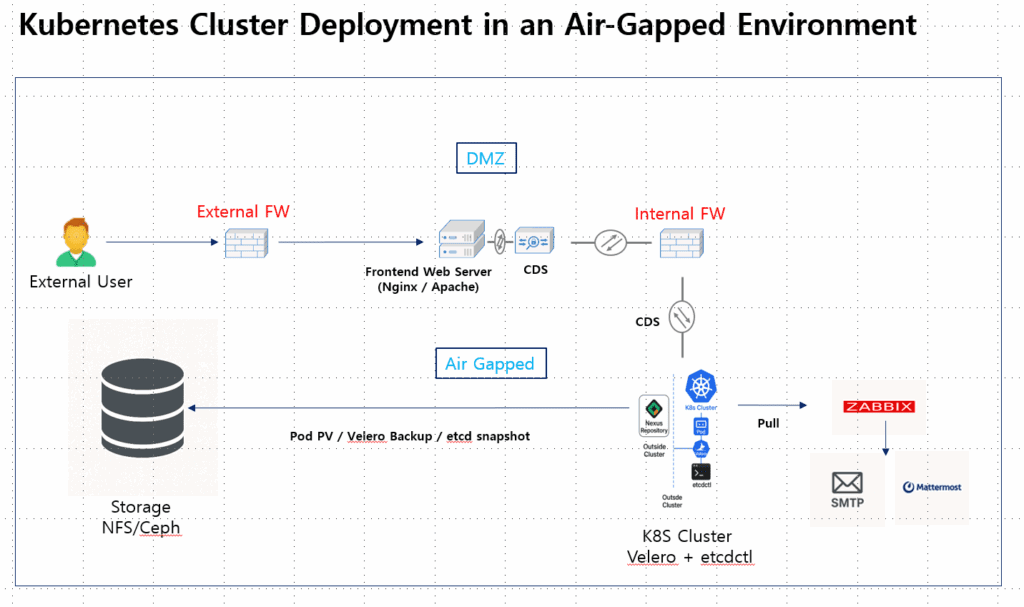

The following design illustrates a practical Kubernetes cluster architecture applicable to real-world air-gapped environments.

1. Overall Architecture

This architecture is built around a three-layer security boundary:

- DMZ (Demilitarized Zone): A buffer zone between external users and the internal network

- Internal Network: Where the Kubernetes cluster, storage, and monitoring systems reside

- Data Exchange Zone: A tightly controlled link for limited data transfer between DMZ and internal network

External users can never directly access the internal network.

All files (packages, images, backups, etc.) move only through an approved data-transfer mechanism.

2. Component Design

(1) DMZ Zone

⦿ Frontend Web Server (Nginx / Apache)

The only entry point for external users.

Requests are processed at the DMZ web tier and never directly forwarded to internal systems.

Any interaction with internal applications occurs solely through the data-transfer solution.

⦿ Data-Transfer Solution

Controls one-way or bi-directional transmission between the external and internal networks.

Usually implemented as file-based gateways (SFTP or dedicated data-bridge appliances).

In Kubernetes operations, it’s used for importing and exporting container images, packages, and backups under strict supervision.
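Because every file crosses the boundary as an opaque bundle, it helps to confirm on the internal side that nothing was truncated or altered in transit. The following is a minimal sketch, not part of the original design: the manifest file name `MANIFEST.sha256` and the helper names are hypothetical, and the sending side is assumed to generate the manifest before handing the bundle to the gateway.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(bundle_dir: Path, manifest_name: str = "MANIFEST.sha256") -> Path:
    """Sending side: write one '<digest>  <relative path>' line per file."""
    manifest = bundle_dir / manifest_name
    lines = [
        f"{sha256_of(p)}  {p.relative_to(bundle_dir).as_posix()}"
        for p in sorted(bundle_dir.rglob("*"))
        if p.is_file() and p.name != manifest_name
    ]
    manifest.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return manifest


def verify_manifest(bundle_dir: Path, manifest_name: str = "MANIFEST.sha256") -> list[str]:
    """Receiving side: return relative paths that are missing or altered."""
    failures = []
    manifest_text = (bundle_dir / manifest_name).read_text(encoding="utf-8")
    for line in manifest_text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        expected, _, rel_path = line.partition("  ")
        target = bundle_dir / rel_path
        if not target.is_file() or sha256_of(target) != expected:
            failures.append(rel_path)
    return failures
```

An empty list from `verify_manifest` means every listed file arrived intact. Anything else is a reason to reject the bundle before it reaches Nexus.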

(2) Internal Network (Air-Gapped Zone)

All critical components operate entirely inside the internal network, without any external connectivity.

⦿ Kubernetes Cluster

- Fully isolated and operates independently of the Internet

- Pulls all required images from the internal Nexus repository

Core Components:

- etcd: Cluster state storage

- Velero: Resource and PV backup

- Nexus Repository: Image and package repository

- Zabbix Agent: Node and application monitoring
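Pulling everything from the internal Nexus means public image references in manifests have to be rewritten. Here is a rough sketch of that rewrite. The registry address `nexus.internal.example:5000` is a placeholder, and dropping the upstream registry host (a flat namespace in Nexus) is an assumption about how the repository is laid out, not something the design prescribes.

```python
INTERNAL_REGISTRY = "nexus.internal.example:5000"  # hypothetical address


def to_internal_image(image: str, registry: str = INTERNAL_REGISTRY) -> str:
    """Rewrite an image reference so it points at the internal registry.

    Follows the usual Docker convention: the first path component is a
    registry host only if it contains '.' or ':' or equals 'localhost';
    bare names such as 'nginx' belong to the 'library/' namespace.
    """
    first, slash, rest = image.partition("/")
    has_registry = bool(slash) and (
        "." in first or ":" in first or first == "localhost"
    )
    if has_registry:
        if first == registry:
            return image  # already internal, leave untouched
        path = rest  # assumption: drop the upstream host (flat namespace)
    else:
        path = image if slash else f"library/{image}"
    return f"{registry}/{path}"


if __name__ == "__main__":
    for ref in ("nginx:1.25", "bitnami/redis:7.2",
                "registry.k8s.io/kube-apiserver:v1.30.0"):
        print(ref, "->", to_internal_image(ref))
```

If images from different upstream registries can share a name, keeping the upstream host as a path prefix would be the safer variant.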

⦿ Nexus Repository

Serves as the central dependency hub for all Kubernetes operations.

It replaces external Internet access by providing locally mirrored packages and images.

| Repository Type | Purpose |
|---|---|
| Docker (OCI) | Kubernetes components and application images |
| Helm Repo | Helm chart distribution |
| APT / YUM Repo | OS and runtime packages |
| PyPI / npm, etc. | Development language dependencies |

Repository content is mirrored periodically from external sources in the external network, then imported into the internal Nexus through either the data-transfer gateway or physical media (e.g., USB).

This ensures up-to-date packages and security patches, even in a fully isolated network.

⦿ Storage (Ceph / NFS)

Stores all persistent data such as Pod PVs, Velero backups, and etcd snapshots.

Ceph is recommended for high availability; NFS can be used for simpler setups.

⦿ Velero / etcdctl

- Velero: Performs full backup of Kubernetes resources and PVs

- etcdctl: Separately backs up cluster state in case Velero restore fails

- Both backups are stored on Ceph or NFS

- Recovery order:

Velero restore → etcd snapshot restore

(3) Monitoring and Alerting System

⦿ Zabbix Server

Deployed outside the Kubernetes cluster for resilience during cluster downtime.

Monitors:

- Node metrics (CPU, Memory, Disk)

- Pod-level resource usage

- Application metrics (HTTP response, error rate)

Metrics are collected via Zabbix Agent2 and API polling.

⦿ Alarm Notification

No external mail or chat servers are used.

Only internal SMTP or Mattermost instances deliver alerts.

Notifications are strictly confined to the internal network to prevent data leakage.
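One way to enforce the internal-only rule in a standalone alert helper is to refuse any destination outside the internal domain before a message is built or sent. The sketch below makes several assumptions: the domain suffix `.corp.internal`, the addresses, and the function names are all hypothetical, and the Mattermost side relies only on the standard incoming-webhook format (a JSON body with a `text` field).

```python
import json
import smtplib
import urllib.request
from email.message import EmailMessage
from urllib.parse import urlparse

INTERNAL_SUFFIX = ".corp.internal"  # hypothetical internal DNS suffix


def ensure_internal(host: str) -> str:
    """Raise if the host is not inside the internal domain."""
    if not host.endswith(INTERNAL_SUFFIX):
        raise ValueError(f"refusing non-internal alert target: {host!r}")
    return host


def build_email(subject: str, body: str, sender: str, recipient: str) -> EmailMessage:
    """Build a plain-text alert mail."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_email(msg: EmailMessage, smtp_host: str, port: int = 25) -> None:
    """Deliver the mail through an internal SMTP relay only."""
    with smtplib.SMTP(ensure_internal(smtp_host), port, timeout=10) as smtp:
        smtp.send_message(msg)


def build_mattermost_request(webhook_url: str, text: str) -> urllib.request.Request:
    """Build a POST request for a Mattermost incoming webhook."""
    ensure_internal(urlparse(webhook_url).hostname or "")
    payload = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Passing the result of `build_mattermost_request(...)` to `urllib.request.urlopen` would send the message. A URL outside the internal domain fails with `ValueError` before any network call is made.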

3. Backup and Recovery Strategy

In an air-gapped environment, remote backups via the Internet are impossible.

Therefore, automated in-cluster backups and redundant local storage are essential.

| Backup Target | Tool | Frequency | Storage |
|---|---|---|---|
| Kubernetes Resources & PV | Velero | Daily | Ceph / NFS |
| etcd State | etcdctl snapshot | Daily | Ceph / NFS |
| Zabbix Config / Templates | Export | Weekly | Internal Backup Directory |
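The two daily rows of the table could be driven by a small job that builds date-stamped commands. This is only a sketch under assumptions: the backup naming scheme is made up, the etcd endpoint and certificate paths are the kubeadm defaults and may differ in your cluster, and the function only returns the command lines so a scheduler (cron, systemd timer) can run them.

```python
from datetime import date
from pathlib import PurePosixPath


def daily_backup_commands(
    day: date,
    snapshot_dir: str,
    endpoint: str = "https://127.0.0.1:2379",      # assumption: local etcd member
    pki_dir: str = "/etc/kubernetes/pki/etcd",     # assumption: kubeadm layout
) -> list[list[str]]:
    """Return the Velero and etcdctl command lines for one daily backup."""
    stamp = day.strftime("%Y%m%d")
    pki = PurePosixPath(pki_dir)
    snapshot = PurePosixPath(snapshot_dir) / f"etcd-{stamp}.db"

    # Velero: back up Kubernetes resources and PVs, wait for completion.
    velero_cmd = ["velero", "backup", "create", f"daily-{stamp}", "--wait"]

    # etcdctl: save a snapshot of the cluster state to the backup mount.
    etcd_cmd = [
        "etcdctl",
        f"--endpoints={endpoint}",
        f"--cacert={pki / 'ca.crt'}",
        f"--cert={pki / 'server.crt'}",
        f"--key={pki / 'server.key'}",
        "snapshot", "save", str(snapshot),
    ]
    return [velero_cmd, etcd_cmd]


if __name__ == "__main__":
    for cmd in daily_backup_commands(date.today(), "/mnt/backup/etcd"):
        print(" ".join(cmd))
```

Here `/mnt/backup/etcd` stands in for wherever the Ceph or NFS volume is mounted on the control-plane node.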

Recovery Order:

1️⃣ Velero restore → 2️⃣ etcd snapshot restore

Velero restores Kubernetes resources and PVs first; the etcd snapshot is the fallback that recovers cluster state if the Velero restore fails.
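Reading the recovery order as "Velero first, etcd snapshot as the fallback" (which is how the etcdctl backup is described earlier), the control flow can be sketched as below. Treating a non-zero exit code as failure is a simplification, since a real runbook would also inspect the Velero restore status. Stopping etcd and pointing it at the restored data directory is deliberately left out. The function and parameter names are hypothetical.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], int]


def run(cmd: Sequence[str]) -> int:
    """Default runner: execute the command and return its exit code."""
    return subprocess.run(list(cmd), check=False).returncode


def recover(backup_name: str, snapshot_file: str, data_dir: str,
            runner: Runner = run) -> str:
    """Try a Velero restore first; fall back to an etcd snapshot restore."""
    velero_cmd = ["velero", "restore", "create",
                  "--from-backup", backup_name, "--wait"]
    if runner(velero_cmd) == 0:
        return "velero"

    # Fallback: rebuild an etcd data directory from the saved snapshot.
    etcd_cmd = ["etcdctl", "snapshot", "restore", snapshot_file,
                f"--data-dir={data_dir}"]
    if runner(etcd_cmd) == 0:
        return "etcd-snapshot"

    raise RuntimeError("both Velero and etcd snapshot restores failed")
```

The `runner` parameter exists so the flow can be rehearsed with a fake runner in a drill, without touching a live cluster.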

4. Package and Image Supply Chain

Because the network is isolated, all OS and application dependencies must be locally managed.

- Mirror the latest packages and images in the external network

  - Docker Hub, Helm Repo, PyPI, apt/yum mirrors, etc.

- Transfer mirrored data into the internal Nexus

  - via data-transfer gateway or physical USB media

- All Kubernetes nodes and applications pull exclusively from the internal Nexus

With this flow, the cluster can perform updates, deployments, and scaling without relying on any external network access.
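For container images, the mirror, transfer, and import steps map onto a handful of standard `docker` commands. The sketch below only plans those commands. It assumes Docker Hub style image names without a registry host. The archive naming and the registry address passed in are hypothetical, and Helm charts and OS packages would need their own tooling.

```python
from pathlib import PurePosixPath


def archive_name(image: str) -> str:
    """Turn an image reference into a flat, file-system-safe archive name."""
    return image.replace("/", "_").replace(":", "_") + ".tar"


def export_commands(images: list[str], bundle_dir: str) -> list[list[str]]:
    """External network: pull each image and save it into the bundle."""
    commands = []
    for image in images:
        archive = str(PurePosixPath(bundle_dir) / archive_name(image))
        commands.append(["docker", "pull", image])
        commands.append(["docker", "save", "-o", archive, image])
    return commands


def import_commands(images: list[str], bundle_dir: str,
                    registry: str) -> list[list[str]]:
    """Internal network: load each archive, retag it, push it to Nexus."""
    commands = []
    for image in images:
        archive = str(PurePosixPath(bundle_dir) / archive_name(image))
        target = f"{registry}/{image}"
        commands.append(["docker", "load", "-i", archive])
        commands.append(["docker", "tag", image, target])
        commands.append(["docker", "push", target])
    return commands


if __name__ == "__main__":
    images = ["bitnami/redis:7.2"]
    for cmd in export_commands(images, "/mnt/bundle"):
        print(" ".join(cmd))
    for cmd in import_commands(images, "/mnt/bundle", "nexus.internal.example:5000"):
        print(" ".join(cmd))
```

Between the two phases, the bundle directory is what travels through the data-transfer gateway or on physical media.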

5. Design Principles Summary

- Clear Network Boundaries

  Layered security: DMZ → Data-Transfer Gateway → Internal Firewall → Internal Network

- Unified Supply Chain Management

  Nexus consolidates packages, images, Helm charts, and language dependencies

- Dual Backup System

  Velero + etcdctl combination preserves both resource data and cluster state

- Independent Monitoring

  Zabbix hosted outside the cluster ensures visibility even during failure

- Internal-Only Alert Channels

  SMTP and Mattermost provide secure, isolated notifications

6. Conclusion

An air-gapped Kubernetes environment is essentially a cloud without the Internet.

It must function entirely on its own, capable of deployment, monitoring, and recovery without external dependencies.

🛠 Last modified: 2025.11.09

ⓒ 2025 엉뚱한 녀석의 블로그 [quirky guy's Blog]. All rights reserved. Unauthorized copying or redistribution of the text and images is prohibited. When sharing, please include the original source link.

