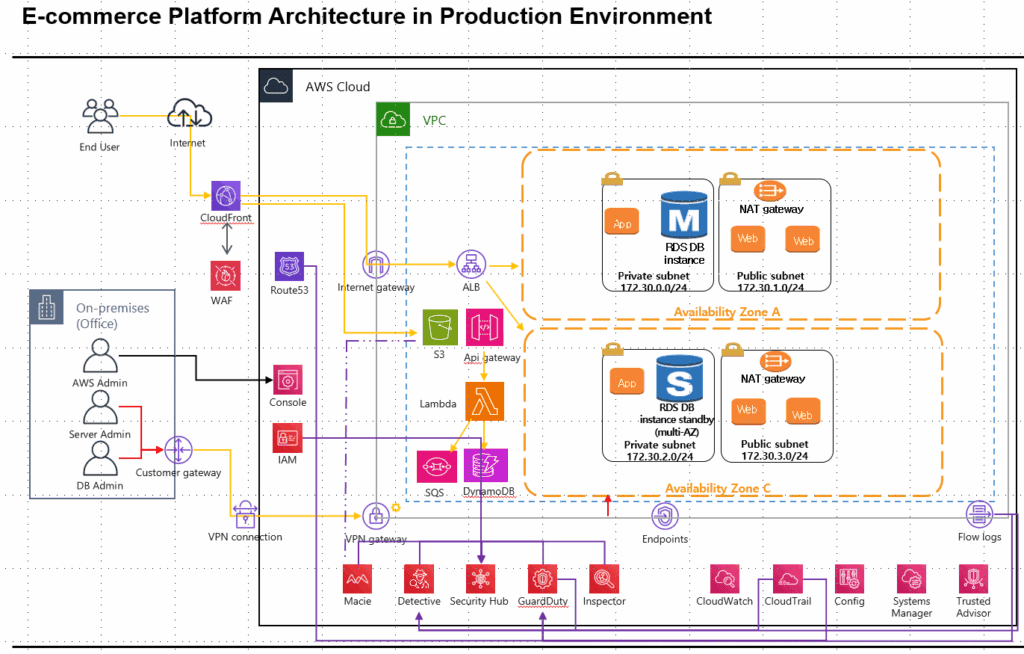

Based on the diagram above, this is a reference architecture for running an e-commerce service on the public cloud without Kubernetes. It has four goals: high availability, low latency, strong security, and cost optimization, especially under burst traffic (e.g., flash sales). The examples assume AWS.

1) High-Level Overview

Network

- A single VPC with public/private subnet pairs across multiple AZs.

- Public subnets: ALB, NAT Gateway, optional Bastion.

- Private subnets: EC2 (ASG), RDS Multi-AZ, internal services, VPC Endpoints.

- Site-to-Site VPN from the on-prem management network to VGW (Virtual Private Gateway). Ops/DB admin traffic stays on private paths only.
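
As a rough illustration of the public/private pairing per AZ, the sketch below carves a VPC CIDR into equal-sized subnets. The CIDR `10.0.0.0/16`, the `/20` subnet size, and the AZ labels are assumptions chosen for the example, not values from the diagram.

```python
import ipaddress


def plan_subnets(vpc_cidr: str, azs: list[str], new_prefix: int = 20) -> dict[str, dict[str, str]]:
    """Assign one public and one private subnet to each AZ, in order."""
    vpc = ipaddress.ip_network(vpc_cidr)
    blocks = vpc.subnets(new_prefix=new_prefix)  # generator of equal-sized blocks
    plan = {}
    for az in azs:
        plan[az] = {"public": str(next(blocks)), "private": str(next(blocks))}
    return plan


# Hypothetical inputs: a /16 VPC split across two AZs.
layout = plan_subnets("10.0.0.0/16", ["az-a", "az-c"])
for az, subnets in layout.items():
    print(az, subnets)
```

In practice private subnets often get larger blocks than public ones, since only the ALB, NAT Gateway, and optional Bastion live in the public tier.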

Traffic Entry

- CloudFront (CDN) in front with AWS WAF.

- Static assets use S3 as the origin. Dynamic requests route to ALB (EC2 app) as the origin.

- Selected APIs (webhooks/event-driven) take a serverless side path: API Gateway → Lambda → SQS/DynamoDB to absorb bursts.

Application

- ALB → Auto Scaling Group (EC2). Deploy application builds via CodeDeploy or SSM, or pull container images from ECR; any standard method is fine.

- Stateful data in RDS Multi-AZ (MySQL/PostgreSQL). Add read replicas when read traffic spikes.

Security & Observability

- WAF, GuardDuty, Macie, Inspector, Security Hub.

- CloudTrail, CloudWatch, VPC Flow Logs, AWS Config, and Systems Manager (SSM).

Endpoints

- Use Gateway/Interface VPC Endpoints (S3, DynamoDB, CloudWatch Logs, SSM, ECR, etc.) so internal calls avoid NAT and reduce cost.

2) Request Flows (Two Paths)

2-1. Static / Cacheable Content

Client → CloudFront (+WAF) → S3 (static) | ALB (cacheable dynamic)

- CloudFront caching, compression, HTTP/2/3, TLS 1.3, and Origin Shield reduce TTFB and origin load.

- Serve images/JS/CSS with long-lived cache headers and content-hashed file names; with Origin Shield, many edge cache misses are still served from the shield layer rather than the origin.
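
The "long-term cache + hashed versions" idea can be sketched as follows. This is a minimal example, assuming a build step that renames each asset after its content; the function name `hashed_name` and the 8-character digest length are illustrative choices.

```python
import hashlib
from pathlib import PurePosixPath

# A one-year lifetime is safe because any content change produces a new file name.
STATIC_CACHE_CONTROL = "public, max-age=31536000, immutable"


def hashed_name(path: str, content: bytes, digest_len: int = 8) -> str:
    """Insert a short content hash before the file extension."""
    digest = hashlib.sha256(content).hexdigest()[:digest_len]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))


v1 = hashed_name("static/app.js", b"console.log('v1');")
v2 = hashed_name("static/app.js", b"console.log('v2');")
print(v1, v2, STATIC_CACHE_CONTROL)
```

Because a new release yields new object keys, old and new versions can coexist in S3 and no CloudFront invalidation is needed for the assets themselves.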

2-2. Dynamic / Event-Driven

- Regular dynamic:

Client → CloudFront → ALB → EC2 (ASG) → RDS

- Burst / async:

Client or external webhook → API Gateway → Lambda → SQS (queue) → backend consumers (EC2 or Lambda) → DynamoDB/RDS

- SQS buffers spikes (sales, pushes, batch settlements). Consumers scale out horizontally and drain safely.

- Heavy read pages (rankings/inventory) can move to DynamoDB + DAX (optional).
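
The buffering effect of the queue can be illustrated with a toy simulation. The arrival numbers and the drain rate below are made up; the point is only that the backlog grows and shrinks while the downstream tier never sees more than its fixed drain rate.

```python
def simulate_queue(arrivals: list[int], drain_per_tick: int) -> list[int]:
    """Return the queue depth after each tick for a fixed consumer rate."""
    depth = 0
    history = []
    for arriving in arrivals:
        depth += arriving
        depth -= min(depth, drain_per_tick)  # consumers never take more than is queued
        history.append(depth)
    return history


# Hypothetical flash sale: two ticks of 10x traffic in an otherwise quiet period.
burst = [100, 100, 1000, 1000, 100, 100, 100, 100]
depths = simulate_queue(burst, drain_per_tick=400)
print(depths)  # [0, 0, 600, 1200, 900, 600, 300, 0]
```

The peak backlog here is 1200 messages, yet the database behind the consumers is only ever asked to handle 400 per tick. The trade-off is added latency for the queued work.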

3) Availability Design

- Multi-AZ everywhere: ALB, distributed ASG, and RDS Multi-AZ (synchronous standby).

- Health checks & auto-recovery: ALB targets, EC2 status checks, automatic RDS failover.

- One NAT Gateway per AZ, so an AZ failure does not take down egress for the remaining AZs. If cost is a concern, reducing NAT dependence matters even more (see §6).

- S3/CloudFront are inherently highly available. Route 53 can add health checks/failover records.

4) Latency Optimization

- CDN first: cache hit rate drives both performance and cost.

- ALB + keep-alive: connection reuse, tuned timeouts; compression via CloudFront (gzip/Brotli).

- Database is the slowest tier: cache reads and offload writes to async (SQS) to smooth peaks.

- VPC Endpoints keep internal calls close and off NAT.

- App autoscaling: step/predictive policies and pre-warm before early-morning sales.

5) Security Design

- Edge: CloudFront WAF rules, rate limiting, IP allow/deny, bot filtering.

- Network: strict public/private subnet separation; least-privilege security groups; DBs private only.

- Identity/Audit: least-privilege IAM, CloudTrail in all regions; immutable log retention in S3 (e.g., Object Lock).

- Detection/Assessment: GuardDuty (threats), Inspector (vulns), Macie (sensitive data), unified in Security Hub.

- Ops access: VPN + IAM.
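
To make "least-privilege IAM" concrete, here is a sketch of a policy document for a queue consumer that may only read and delete messages on one queue. The queue ARN, account ID, and `Sid` are hypothetical placeholders.

```python
import json


def sqs_consumer_policy(queue_arn: str) -> dict:
    """Build an IAM policy that allows consuming from exactly one SQS queue."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ConsumeOneQueue",
                "Effect": "Allow",
                # Only the actions a consumer needs; no wildcard actions.
                "Action": [
                    "sqs:ReceiveMessage",
                    "sqs:DeleteMessage",
                    "sqs:GetQueueAttributes",
                ],
                "Resource": queue_arn,  # one queue, not "*"
            }
        ],
    }


# Hypothetical ARN for illustration only.
policy = sqs_consumer_policy("arn:aws:sqs:us-east-1:123456789012:orders-queue")
print(json.dumps(policy, indent=2))
```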

6) Cost Optimization — Especially for Bursts

The levers are NAT reduction, cache hit rate, async design, and pricing mix.

6-1. Cut NAT Spend

- Use VPC Endpoints aggressively: S3/DynamoDB (Gateway) and CloudWatch Logs/SSM/ECR (Interface)

→ large egress doesn’t traverse NAT (lower volume × lower rate).

- Minimize outbound calls. For third-party calls, batch/async through Lambda where possible.
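
A back-of-the-envelope comparison shows when interface endpoints pay off. All rates below are illustrative assumptions, not quoted prices; check current pricing for your region. The NAT hourly charge is left out because a NAT Gateway is still needed for true external egress either way. Gateway endpoints (S3/DynamoDB) carry no hourly or per-GB charge, so they are not modeled.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730


@dataclass(frozen=True)
class Rates:
    """Assumed USD rates for the sketch."""
    nat_per_gb: float = 0.045       # NAT Gateway data processing
    endpoint_hourly: float = 0.01   # per interface endpoint, per AZ
    endpoint_per_gb: float = 0.01   # interface endpoint data processing


def endpoint_fixed_cost(endpoint_count: int, az_count: int, r: Rates) -> float:
    return endpoint_count * az_count * r.endpoint_hourly * HOURS_PER_MONTH


def monthly_savings(gb_moved: float, endpoint_count: int, az_count: int, r: Rates = Rates()) -> float:
    """Savings from moving gb_moved of AWS-service traffic off NAT onto interface endpoints."""
    via_nat = gb_moved * r.nat_per_gb
    via_endpoints = endpoint_fixed_cost(endpoint_count, az_count, r) + gb_moved * r.endpoint_per_gb
    return via_nat - via_endpoints


def break_even_gb(endpoint_count: int, az_count: int, r: Rates = Rates()) -> float:
    """Monthly volume above which the endpoints are cheaper than NAT processing."""
    return endpoint_fixed_cost(endpoint_count, az_count, r) / (r.nat_per_gb - r.endpoint_per_gb)


# Hypothetical workload: 20 TB/month of logs, SSM, and ECR traffic; 3 endpoints in 2 AZs.
savings = monthly_savings(gb_moved=20_000, endpoint_count=3, az_count=2)
threshold = break_even_gb(endpoint_count=3, az_count=2)
print(f"savings ~ ${savings:.2f}/month, break-even ~ {threshold:.0f} GB/month")
```

Under these assumed rates, low-volume services may not justify their own interface endpoint, which is why the break-even volume is worth checking per endpoint.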

6-2. Protect Origins with CDN

- Static uses S3 origin; for dynamic, use short TTL + stale-while-revalidate patterns.

- Image resizing at the edge via Lambda@Edge (with CloudFront Functions for lightweight URL/header normalization) reduces origin storage and data transfer.
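
The short TTL + stale-while-revalidate pattern and its effect on origin load can be sketched in a few lines. The TTL values and request rates are assumptions for illustration; the origin sets the header and the CDN honors it.

```python
def dynamic_cache_control(ttl_s: int, swr_s: int) -> str:
    """Cache-Control for cacheable dynamic pages: short TTL, serve stale while refreshing."""
    return f"public, max-age={ttl_s}, stale-while-revalidate={swr_s}"


def origin_rps(edge_rps: float, hit_ratio: float) -> float:
    """Requests per second that still reach the origin at a given cache hit ratio."""
    return edge_rps * (1.0 - hit_ratio)


header = dynamic_cache_control(ttl_s=5, swr_s=30)
print(header)  # public, max-age=5, stale-while-revalidate=30

# Hypothetical 10,000 rps at the edge during a sale.
for ratio in (0.80, 0.95):
    print(ratio, round(origin_rps(10_000, ratio)))
```

Moving the hit ratio from 80% to 95% cuts origin traffic from roughly 2,000 to 500 rps in this example, which is why even a few seconds of TTL on hot pages matters.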

6-3. Absorb Bursts with Queues

- Orders/notifications/settlement/webhooks: API GW → Lambda → SQS to flatten spikes.

- Consumers scale (ASG/Lambda) and prevent sudden DB pressure.

- DynamoDB: on-demand capacity absorbs write bursts; TTL expiry and a small, deliberate set of GSIs keep costs in check; DAX serves cacheable reads.

6-4. Compute Pricing Mix

- Cover 50–70% steady load with Savings Plans/Reserved Instances.

- Serve peaks with On-Demand plus async paths.

- Spot isn’t ideal for front-end web (interruption risk). Use it for async consumers/batch only.
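
The pricing mix can be reasoned about with a simple model: committed capacity is billed every hour whether used or not, and anything above it is billed at the On-Demand rate. The load profile, unit rate, and 30% commitment discount below are assumptions, not published prices.

```python
def blended_cost(load_by_hour: list[int], committed_units: int,
                 on_demand_rate: float, commit_discount: float) -> float:
    """Total cost for a load profile with a fixed commitment plus On-Demand overflow."""
    committed_rate = on_demand_rate * (1.0 - commit_discount)
    total = 0.0
    for load in load_by_hour:
        overflow = max(0, load - committed_units)
        # The commitment is paid in full even when the load is below it.
        total += committed_units * committed_rate + overflow * on_demand_rate
    return total


# Hypothetical day: 20 quiet hours at 10 units, 4 peak hours at 30 units.
profile = [10] * 20 + [30] * 4
for commit in (0, 10, 30):
    print(commit, round(blended_cost(profile, commit, on_demand_rate=0.10, commit_discount=0.30), 2))
```

With these numbers, committing to the steady 10 units is cheapest (24.8), pure On-Demand costs 32.0, and committing to the peak costs 50.4, which illustrates why the commitment should track the baseline rather than the peak.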

6-5. RDS Cost/Performance Balance

- Start with RDS Multi-AZ on general-purpose (gp3) storage. Move to Provisioned IOPS (io1/io2), or to Aurora I/O-Optimized if you migrate to Aurora, only when you truly hit IO limits.

- Offload reads to read replicas and in-app/CloudFront caching to avoid DB over-sizing.

- For critical write spikes, buffer via SQS and commit in batches—often the biggest saver.
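
Batch commits can be sketched with the standard library's `sqlite3` as a stand-in for RDS. The `orders` table, its columns, and the batch size are assumptions for the example; a real consumer would read the batch from SQS and use its MySQL/PostgreSQL driver.

```python
import sqlite3
from itertools import islice


def batched(iterable, size):
    """Yield lists of up to `size` items."""
    it = iter(iterable)
    while chunk := list(islice(it, size)):
        yield chunk


def commit_in_batches(conn: sqlite3.Connection, orders, batch_size: int = 100) -> int:
    """Insert queued orders using one transaction per batch; return the number of commits."""
    commits = 0
    for chunk in batched(orders, batch_size):
        with conn:  # commits on success, rolls back the whole batch on error
            conn.executemany(
                # OR IGNORE makes redelivered messages harmless (primary key = idempotency key)
                "INSERT OR IGNORE INTO orders (order_id, amount) VALUES (?, ?)",
                chunk,
            )
        commits += 1
    return commits


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id TEXT PRIMARY KEY, amount INTEGER)")
queued = [(f"order-{i}", 100 + i) for i in range(250)]  # hypothetical queued messages
commits = commit_in_batches(conn, queued, batch_size=100)
rows = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(commits, rows)  # 3 250
```

Here 250 writes cost three commits instead of 250, which is the mechanism behind the savings: fewer transactions and fewer round trips on the write path.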

6-6. Observability Spend

- Log sampling, short hot retention, and lifecycle to Glacier.

- Enable VPC Flow Logs only where needed and filter before storage.
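
One way to implement log sampling is to keep every warning and error but only a deterministic fraction of routine lines, keyed on the request ID so that all lines of a sampled request stay together. The level names, the 10% default, and the function name are assumptions for this sketch.

```python
import zlib

ALWAYS_KEEP = {"ERROR", "WARN"}


def keep_log(level: str, request_id: str, sample_rate: float = 0.1) -> bool:
    """Decide whether to ship a log line to hot storage."""
    if level in ALWAYS_KEEP:
        return True
    # Stable hash: the same request ID always lands in the same bucket.
    bucket = zlib.crc32(request_id.encode("utf-8")) % 10_000
    return bucket < sample_rate * 10_000


print(keep_log("ERROR", "req-123"))           # True: errors are never sampled out
print(keep_log("INFO", "req-123", 1.0))       # True: 100% sampling keeps everything
print(keep_log("INFO", "req-123", 0.0))       # False: 0% sampling drops routine lines
```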

7) Component Checklists

VPC/Subnets

- AZ A/C pair. Public (NAT/ALB), Private (EC2/RDS/Endpoints).

- Routing: private subnets prefer endpoints; use NAT only for true external egress.

CloudFront + WAF

- Cache policies, compression, Origin Shield, managed rules + custom rate limits.

ALB + ASG (EC2)

- Health checks, scale-out on CPU/latency/queue length, warm up before deployments.

- Golden AMIs and minimal UserData to shorten instance boot time.
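
Scaling on queue length usually means sizing the fleet so the current backlog drains within a target time. A minimal sketch of that calculation follows; the per-instance throughput, drain target, and fleet bounds are hypothetical inputs you would measure or choose yourself.

```python
import math


def desired_capacity(backlog: int, per_instance_rate: float, target_drain_seconds: float,
                     min_size: int, max_size: int) -> int:
    """Instances needed to drain `backlog` messages within the target, clamped to fleet bounds."""
    needed = math.ceil(backlog / (per_instance_rate * target_drain_seconds))
    return max(min_size, min(max_size, needed))


# Hypothetical: each instance handles 20 msg/s and the backlog should clear within 60 s.
for backlog in (0, 12_000, 100_000):
    print(backlog, desired_capacity(backlog, 20, 60, min_size=2, max_size=20))
```

The clamp matters in both directions: `min_size` keeps the multi-AZ baseline alive when the queue is empty, and `max_size` caps spend and protects the database from an oversized consumer fleet.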

API Gateway + Lambda + SQS/DynamoDB (optional path)

- Separate the burst path. Set DLQs/retries and idempotency keys.

- DynamoDB on-demand with solid partition-key design.
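
The DLQ/retry/idempotency combination can be sketched as a small in-memory consumer. The message shape (`idempotency_key`, `body`, `receive_count`) and the in-memory `seen` set are assumptions; in a real setup SQS's redrive policy moves messages to the DLQ, and the set of processed keys lives in a durable store such as DynamoDB.

```python
def process_batch(messages: list[dict], handler, seen: set, dlq: list, max_receives: int = 3) -> list[dict]:
    """Apply each message at most once; return the messages that should be retried."""
    retry = []
    for msg in messages:
        key = msg["idempotency_key"]
        if key in seen:
            continue  # duplicate delivery: already applied, so just acknowledge it
        try:
            handler(msg["body"])
            seen.add(key)  # record success only after the handler completes
        except Exception:
            failed = {**msg, "receive_count": msg["receive_count"] + 1}
            # After too many failed attempts, park the message instead of retrying forever.
            (dlq if failed["receive_count"] >= max_receives else retry).append(failed)
    return retry


applied = []


def handler(body: str) -> None:
    if body == "poison":
        raise ValueError("cannot process")
    applied.append(body)


seen, dlq = set(), []
batch = [
    {"idempotency_key": "order-1", "body": "charge order-1", "receive_count": 0},
    {"idempotency_key": "order-1", "body": "charge order-1", "receive_count": 0},  # duplicate
    {"idempotency_key": "order-2", "body": "poison", "receive_count": 2},
]
retry = process_batch(batch, handler, seen, dlq)
print(applied, retry, dlq)
```

The duplicate is applied once, and the failing message lands in the DLQ on its third attempt, so one bad message cannot block the rest of the queue.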

RDS Multi-AZ

- Backups/snapshots, parameter groups, connection pooling, slow query metrics.

- If splitting reads/writes, handle routing/driver configuration explicitly.
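
Explicit read/write routing might look like the sketch below. The endpoint host names are hypothetical, and the SQL classification is deliberately naive; many teams do this in the driver, an ORM, or a proxy instead.

```python
import itertools


class EndpointRouter:
    """Send plain reads to replicas (round-robin) and everything else to the writer."""

    def __init__(self, writer: str, readers: list[str]):
        self.writer = writer
        self._readers = itertools.cycle(readers) if readers else None

    def endpoint_for(self, sql: str, in_transaction: bool = False) -> str:
        statement = sql.lstrip().upper()
        is_plain_read = statement.startswith("SELECT") and "FOR UPDATE" not in statement
        # Reads inside a transaction stay on the writer to avoid replica-lag surprises.
        if is_plain_read and not in_transaction and self._readers is not None:
            return next(self._readers)
        return self.writer


# Hypothetical endpoints for illustration.
router = EndpointRouter("writer.db.internal", ["reader-1.db.internal", "reader-2.db.internal"])
print(router.endpoint_for("SELECT * FROM products"))
print(router.endpoint_for("UPDATE stock SET qty = qty - 1 WHERE id = 7"))
```

Read replicas lag the primary slightly, so read-your-own-write paths (for example, showing an order right after checkout) should pass `in_transaction=True` or otherwise be pinned to the writer.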

Security/Operations

- Least-privilege IAM. SSM sessions. Secrets in Secrets Manager/Parameter Store.

- Pipe GuardDuty/Inspector/Macie into Security Hub; alert via CloudWatch Alarms.

8) Why “No Kubernetes”?

- ALB + ASG plus serverless achieves strong availability and elasticity without extra platform complexity.

- Queue-based async handles bursts more predictably and cheaply.

- Standardized ops (SSM/Inspector/Config/CloudTrail) and clean cost visibility are simpler to maintain.

9) Growth Path (as Traffic Matures)

- More reads: stronger global caching (CloudFront), DB replicas, or DynamoDB adoption.

- Bigger write spikes: expand SQS, scale consumers, batch commits.

- Lower DB latency / higher resilience: consider Aurora (incl. Serverless v2).

- More global users: CloudFront regional optimizations; Route 53 latency-based routing.

Summary

- Static via S3 + CloudFront, dynamic via ALB + ASG, and bursts via API GW + Lambda + SQS.

- RDS Multi-AZ for write availability/consistency; read replicas/caches for read scale.

- VPC Endpoints reduce NAT dependence; higher CDN cache cuts origin and transfer costs.

- Use Savings Plans/RI for steady load; handle peaks with async + On-Demand.

- Keep security strong at the edge (WAF) and with account-level detection/audit.

This design meets availability, latency, security, and cost goals while safely absorbing flash-sale–style burst traffic. Operations stay simple, costs remain predictable, and the scale path is clear.

🛠 Last updated: 2025.11.09

ⓒ 2025 엉뚱한 녀석의 블로그 [quirky guy's Blog]. All rights reserved. Unauthorized copying or redistribution of the text and images is prohibited. When sharing, please include the original source link.
